The case against an AI bubble

Lots of people are, once again, proclaiming the death of AI.

Let’s take a step back and look at the big picture.

Every time AI “dies”, there’s an overwhelming narrative driving this idea. The last time this happened, it was when DeepSeek released the R1 model and a narrative went viral on TikTok that China was beating the American AI industry with a fraction of the compute that Americans needed (in that case it’s likely not coincidental that TikTok’s parent company is Chinese).

At the moment, the new narrative is threefold:

GPT-5 failed. It was a hype train but it turned out to be a flop

95% of AI pilot projects at companies are failing

Meta is downsizing their AI and giving up on it

If you just want the tl;dr and wish to move on with your day: none of the three points above is true. People are desperate for any headline that tells them AI is failing because many people want AI to fail. The truth doesn’t matter to this very large crowd, and they make a lot of noise whenever they have fuel for their narrative.

You can stop here if you want. But I’ll address each item:

“GPT-5 failed”

When GPT-5 first came out, a lot of people were disappointed. It wasn’t as friendly as 4o, and didn’t always think as long as it was supposed to.

People initially claimed it was a dud. But power users had a very different experience. While most users only had access to GPT-5 fast or thinking, power users usually use GPT-5 Pro.

And that’s the crux of it. Most people do not have access to the best model. But what are people doing with the best model?

Some of them are getting it to literally push the frontier of mathematics forward. Others are getting it to perform complicated agent workflows exactly to the letter of their prompt. And others are getting it to perform novel medical research and reach the same conclusions as unpublished research studies. And others are getting it to just perform incredibly complex code edits from a single prompt.

GPT-5 didn’t fail, but most people cannot yet squeeze the juice from the model. However, it has proven that scaling inference-time reasoning works and provides incredible results.

Professionals will continue to use the best, most expensive models to get returns on their investments, and it’s very possible resources reallocate to the top 5% of power users who know how to get productive value from them.

“AI pilot projects at companies are failing”

I just don’t see this as true on the ground. AI projects are taking off across the board. Hundreds of AI-focused apps and agentic workflows are having success. Larger companies are incorporating AI into their internal workflows and products and seeing improved user experience and usage. The fact is that AI works for thousands of practical workflows across a huge swath of software interactions, and people want it.

And let’s not forget the biggest AI project of them all: ChatGPT itself now has 700 million weekly users and growing.

I’ve been seeing professionals use ChatGPT’s agent capabilities first-hand, and it is producing legitimately valuable economic labor. If that tool is able to do that, I know that more specialized and integrated agent workflows are producing incredible productivity returns for people in many places.

Basically, we shouldn’t treat the earliest corporate pilots as an accurate measurement. They were run with much worse models, when developers were only just beginning to figure out how to integrate AI.

“Meta is downsizing their AI and giving up on it”

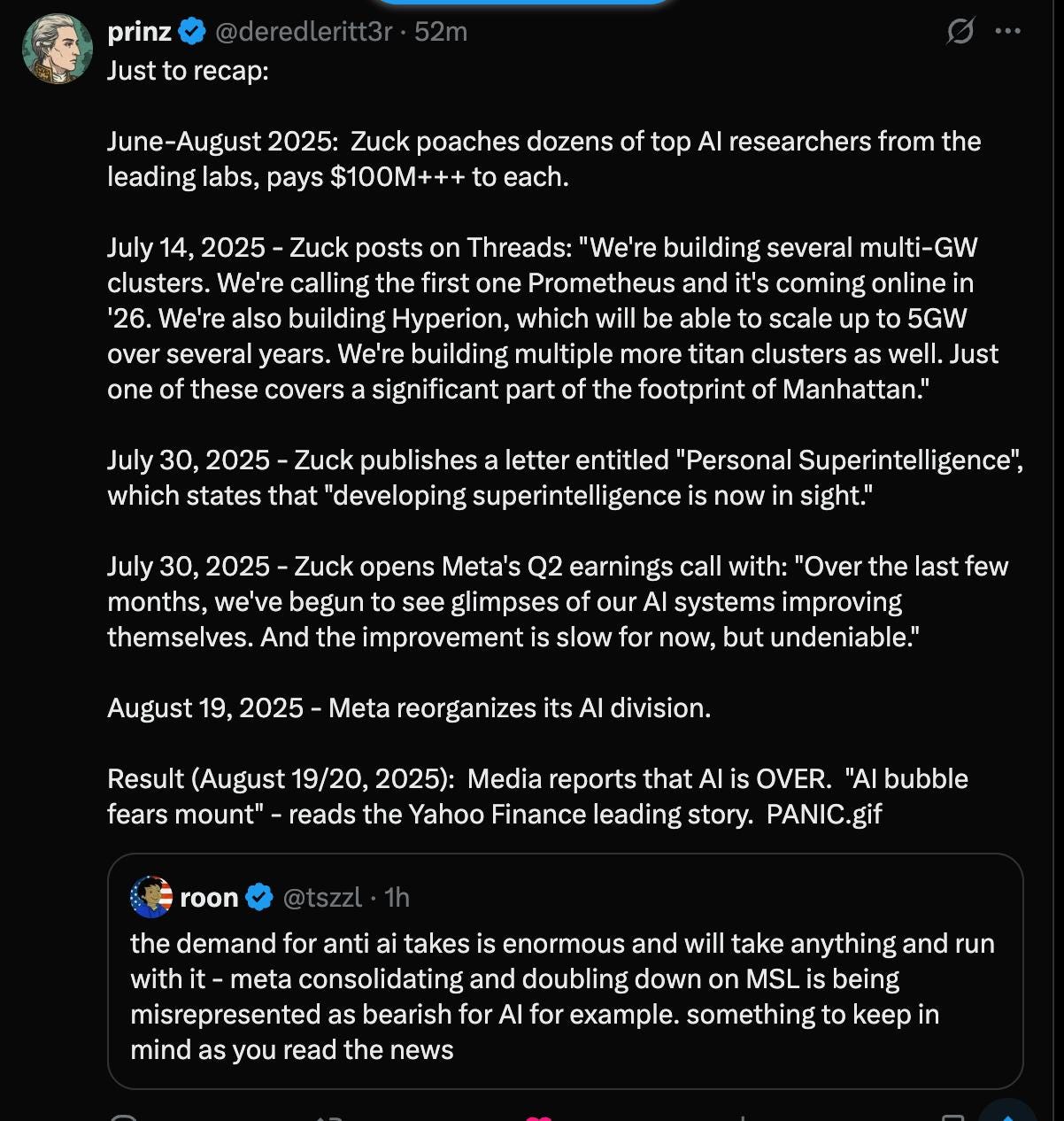

prinz said it best:

Meta is dead serious about superintelligence; they are simply doubling down on Meta Superintelligence Labs and want efforts focused there while shrinking and reallocating other efforts.

So are we entering an AI winter?

Something that I believe less than 1% of people have truly internalized yet is the notion that the singularity is legitimately here.

Perhaps that sounds crazy, but it is a simple principle and one that I don’t believe will lead anyone astray at this point.

The different labs are all finding novel ways to scale, improve, and optimize the intelligence of their models. They all have many more incredible advances coming down the pipe. The notion of an AI winter seems like a major over-dramatization at this point.

But the other important thing to keep in mind is that even if a slowdown were to occur, the AI researchers all now have a massive army of AI agents to scour all the research in the world to help them come up with additional novel neural architecture and training techniques. This is something no researchers had during past AI winters. Any slowdown would not last long.

Just keep in mind moving forward that AI is a gut punch to the human ego and threatens many people’s sources of income. People will continue to wishcast the downfall of AI, and cheer every time they see a headline saying AI is done.

But the people writing the headlines have incentives similar to the reader’s, and are often not the people experimenting and pushing the frontier enough to accurately report whether AI is failing or not. The whole spectacle is the blind leading the blind, and it is best not to find yourself wrapped up amongst them.